Introduction

Watch Phil Harvey and Azure Dan work through analysing the Plays of Shakespeare using the Cognitive Services Text Analytics integration with Azure Data Lake Analytics.

In this video you will learn how to:

- plan out your solution

- process the plays of Shakespeare as data

- decide on your method of data ingest

- provision Data Lake Store in the Azure Portal

- provision Data Lake Analytics in the Azure Portal

- install the Cognitive Services into Data Lake Analytics

- run text sentiment analysis against your data

- link Power BI to Data Lake Store

- visualise sentiment data

- … maybe a bit about Shakespeare?

The Video

Follow Phil Harvey on Twitter: @codebeard

Follow Azure Dan on Twitter: @azuredan

Resources

A few resources are called out in the video.

The Complete Works of Shakespeare

This can be found on the MIT Open Courseware site.

Please read the licence carefully when using this data.

Data Ingest Options Flow Chart (Whitepaper)

The original white paper mentioned in the video, Azure Data Platform Ingest Options V2.pdf (V2), is available to download and read. The main flowchart takes you through the choices you will need to make. The document also covers many considerations about where the data will go after ingest, which you may find useful.

Because the world of cloud is ever changing to bring great new features to everyone, the original paper will likely need updating on an ongoing basis. For example, Data Ingest Flowchart V3 Draft.pdf is the current iteration of the flow chart.

Because of this, I will be converting the document to a Citadel page so that it is easier to keep up to date.

Regular Expression Documentation

In the video we mention the Regular Expressions documentation available on docs.microsoft.com. It is a great starting point to learn from and refer back to. Regular expressions, like any skill, need to be practised to develop comfort and ability. Extracting the plays of Shakespeare would make good practice indeed!

F# Scripts

There are 2 script files in scripts.zip that are presented for learning purposes. They are presented without warranty or guarantee. As commented on in the video they are not examples of good code. They do work on my machine though.

- getPlay.fsx is used to extract one play from the complete works file

- process2.fsx is used to turn the extracted play into the data files provided below

Once processed, each line of the output files should conform to the schema given in the next sections and to this regular expression: ([^\|]+?\|){8}[\w\s,&]+?\|[^\|]+$. If a line doesn’t conform to this regular expression, the U-SQL script will throw an error.
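If you want to check your own processed files before uploading them, something like the following Python sketch could help. It is not one of the scripts from the video. It reuses the regular expression quoted above and assumes the pattern should be applied from the start of each line (hence `re.match`). The function names and sample rows are made up for illustration.

```python
import re

# Pattern quoted in the post. Using re.match anchors it at the start of the
# line, which is an assumption about how the check is meant to be applied.
LINE_PATTERN = re.compile(r"([^\|]+?\|){8}[\w\s,&]+?\|[^\|]+$")


def conforms(line: str) -> bool:
    """Return True if the line has the expected pipe-delimited shape."""
    return LINE_PATTERN.match(line.rstrip("\r\n")) is not None


def nonconforming_lines(lines):
    """Yield (line_number, line) for every line that fails the check."""
    for number, line in enumerate(lines, start=1):
        if not conforms(line):
            yield number, line


# Made-up sample rows for illustration only, not taken from the data files.
sample = [
    "1|0.1|1|0.5|1|2.0|1|1|THESEUS|Now, fair Hippolyta",
    "2|0.2|2|1.0|2|4.0|1|1|THESEUS",  # missing the final field
]
bad = list(nonconforming_lines(sample))
```

To check a real file, pass an open file handle to `nonconforming_lines` and print whatever it yields. An empty result means every line passed.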

Plays

As discussed we are uploading 5 processed plays here for you to use with the U-SQL Script below. These are:

The schema of these files is:

play line number|play progress %|act line number|act progress %|scene line number|scene progress %|act|scene|character|line
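As a rough illustration of the schema, here is a small Python sketch that splits one line into named fields. The field names are my own snake_case versions of the column names above. Every value is kept as a string because the exact numeric formats in the files are not described here. The sample row is made up.

```python
from typing import NamedTuple


class PlayLine(NamedTuple):
    """One row of a processed play file; names mirror the schema above."""
    play_line_number: str
    play_progress_pct: str
    act_line_number: str
    act_progress_pct: str
    scene_line_number: str
    scene_progress_pct: str
    act: str
    scene: str
    character: str
    line: str


def parse_line(raw: str) -> PlayLine:
    """Split a pipe-delimited row into its ten fields."""
    fields = raw.rstrip("\r\n").split("|")
    if len(fields) != len(PlayLine._fields):
        raise ValueError(f"expected 10 fields, got {len(fields)}")
    return PlayLine(*fields)


# Made-up sample row for illustration only, not taken from the data files.
row = parse_line("1|0.1|1|0.5|1|2.0|1|1|HELENA|Call you me fair?\n")
```

After parsing, `row.character` and `row.line` give you the speaker and the spoken text, which are the two fields the sentiment analysis is most concerned with.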

U-SQL Script

The script used in the video is Analysis3.usql and can be used with the files in the section above.

This is a great place to start understanding the cognitive capabilities of Data Lake Analytics.

Power BI

To use this service, please sign up for Power BI and/or download the desktop application.

This guide will help you get started with loading data from Azure Data Lake Store.

The Results

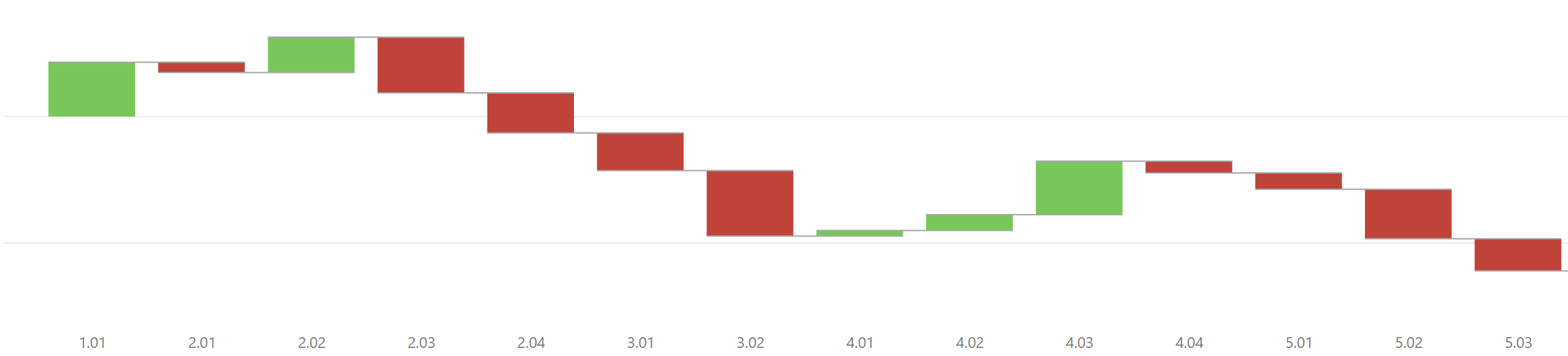

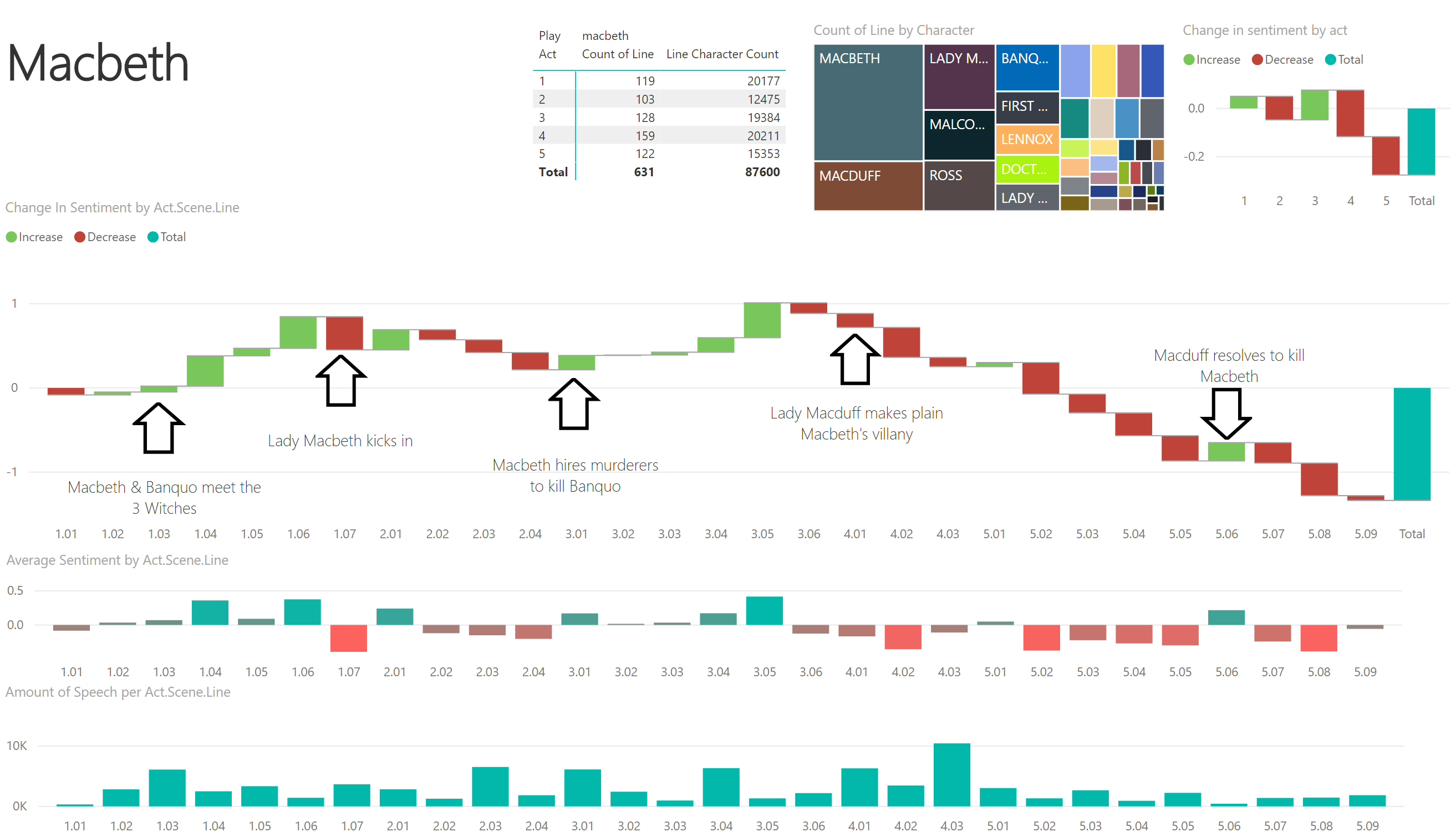

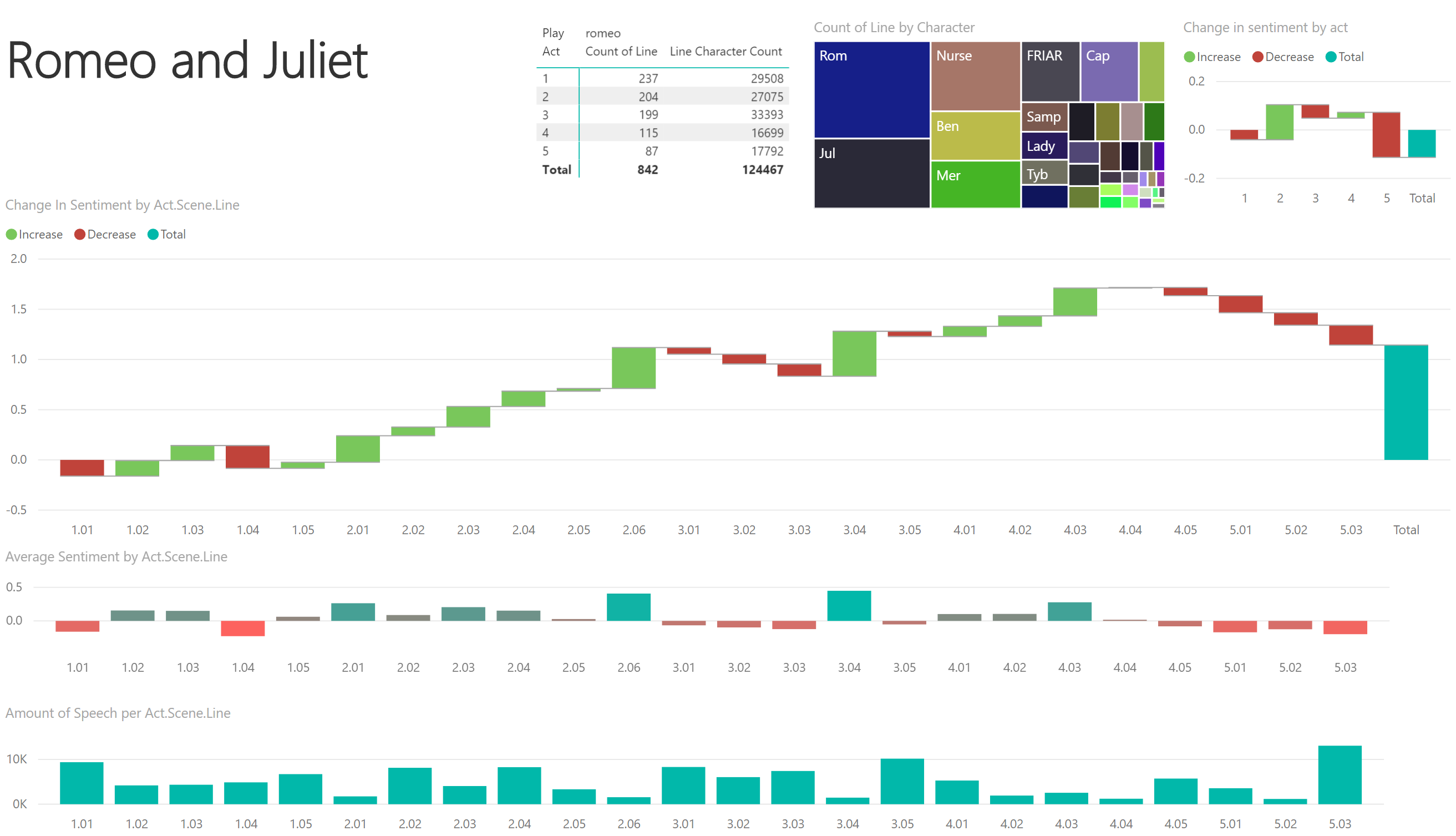

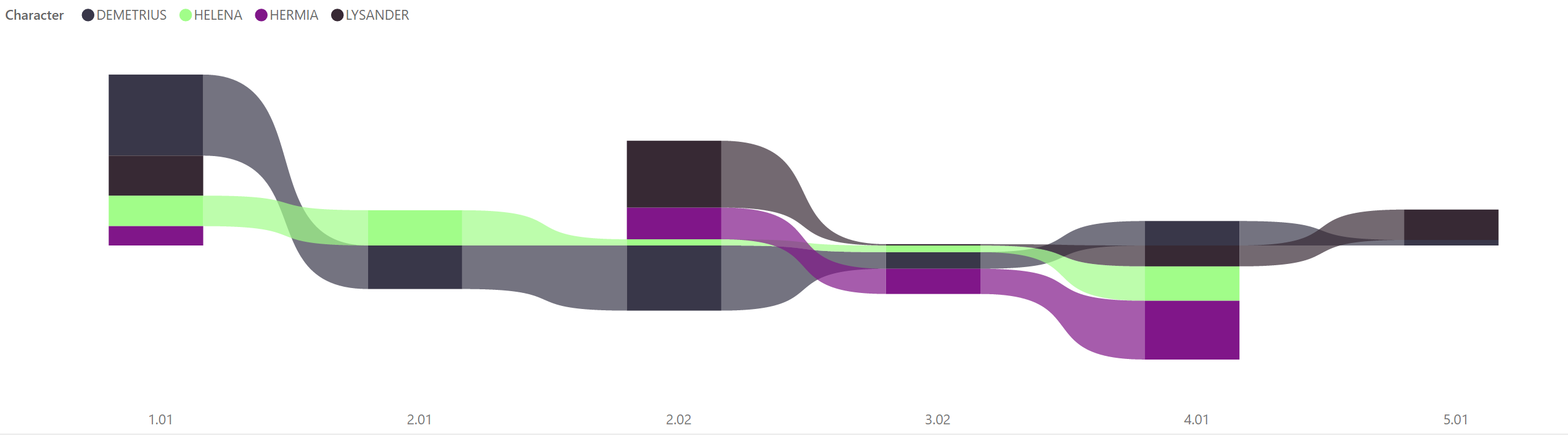

Here are some examples of the results in Power BI. Do they fit what you remember of the plays?

Using various charts, you can explore the interactions of certain characters, for example Helena and Hermia in ‘A Midsummer Night’s Dream’ and their love confusion.
